Examining Factors Influencing Behavioral Intentions in Generative AI Adoption

This research explores factors influencing U.S. college students’ attitudes and behavioral intentions toward generative AI adoption, using ChatGPT as a case study. Despite divergent discussions of its applications and impacts, few studies have investigated people’s perceptions of such technologies. Given that college students are a potential major user group and the future workforce, this research fills the gap by incorporating the Technology Acceptance Model (Davis, 1989) and the Theory of Planned Behavior (Ajzen, 1991) to explore the relationship between the DVs (attitude and behavioral intention) and the IVs (e.g., perceived usefulness, perceived ease of use, subjective norm, perceived intelligence) in ChatGPT adoption. The study used a pre-post single-condition experiment and found a significant positive relationship between college students’ perceived usefulness and behavioral intention toward ChatGPT. Although no statistically significant relationships were found among the other variables, a trend was observed in which greater agreement with the IV statements corresponded to higher scores on the DVs. The study contributes to the research on the Technology Acceptance Model in the realm of generative AI adoption and serves as a model for future scholars exploring the adoption of other similar AI tools or studying a broader population.

With the rapid advancement of artificial intelligence (AI) and natural language processing (NLP), more sophisticated large language models (LLMs) and generative AI tools have emerged (Ray, 2023). Generative AI is capable of generating new data by learning patterns from existing data (Ray, 2023). Notably, ChatGPT, an AI language model developed by OpenAI, is among the most prevalent and powerful tools in the current market. It draws on vast amounts of data to generate human-like responses and perform high-level cognitive tasks, and it has sparked diverse discussions on generative AI’s applications and potential impacts across various fields since its launch (Liu et al., 2023; Shen et al., 2023). Educational institutions have also raised concerns about its possible role in facilitating student cheating, while some perceive its transformative potential in higher education (Susnjak, 2022). However, people’s perceptions of such technologies remain relatively unknown. Given that college students are a potential major user group and the future workforce, their insights into AI technologies are especially relevant. Because of the existing gap in research on this demographic’s perspectives on AI tools, this research aims to investigate U.S. college students’ attitudes and behavioral intentions toward generative AI, using ChatGPT as an example.

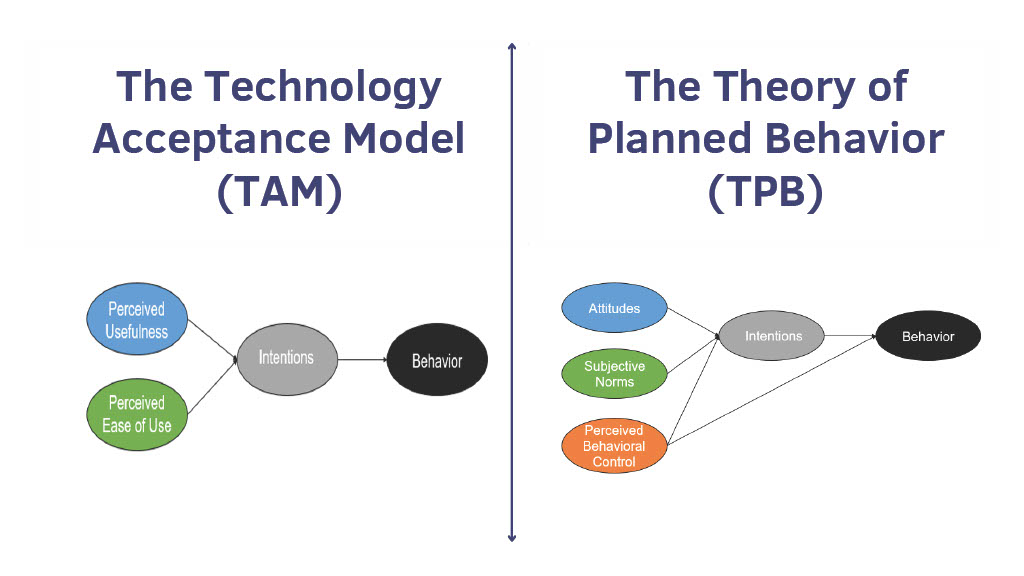

Previous scholarship suggests that, in the Technology Acceptance Model, perceived usefulness and perceived ease of use influence people’s attitudes and behavioral intentions toward new technology (Davis, 1989). The Theory of Planned Behavior identifies further factors that determine individuals’ behavioral intentions: attitude, subjective norms, and perceived behavioral control (Ajzen, 1991). Adopting the two theoretical frameworks, the researcher includes the independent variables of perceived usefulness, perceived ease of use, and subjective norm, while the dependent variables are people’s attitudes and behavioral intentions toward ChatGPT.

This research specifically aims to explore factors influencing U.S. college students’ attitudes and behavioral intentions toward ChatGPT by conducting a pre-post single-condition experiment. The paper includes a literature review, methodology, results, and discussion. The following literature review discusses the development of the two theories and how they inform the research design, specifically the hypotheses and methodology. Ultimately, the research not only contributes to testing the two established theories within the context of generative AI and LLMs but also potentially yields valuable insights into the future acceptance of ChatGPT and similar AI technologies among the new generation.

The Technology Acceptance Model (TAM) is a theoretical framework developed to understand how users adopt new technologies, and it plays a pivotal role in shaping research on technology adoption. Building upon the Theory of Reasoned Action (TRA) proposed by Fishbein and Ajzen in 1975, Fred Davis introduced TAM in 1989 (Davis et al., 1989). The TRA posits that an individual's behavior is determined by their intention to perform the behavior, which is influenced by their attitude and subjective norms regarding that behavior (Fishbein & Ajzen, 1975).

TAM extends TRA by considering how behavioral intention is connected to actual behavior. It proposes that users' intention to adopt technology is shaped by their attitude toward it and influenced by their overall impression of the technology (Davis et al., 1989). The model further identifies two key factors significantly impacting an individual's intention to use new technology: perceived usefulness (PU) and perceived ease of use (PEOU) (Davis, 1989). PU is defined as "the degree to which a person believes that using a particular system would enhance his or her job performance" (Davis, 1989). In this case, it is whether generative AI is productive and effective. Davis also defined PEOU as "the degree to which a person believes that using a particular system would be free of effort" (Davis, 1989). In this situation, it is whether ChatGPT is easy for users to learn and use.

Research over the past years has demonstrated the substantial impact of PEOU on PU. Previous scholars also found that PU directly affects behavioral intention (Davis, 1989). Furthermore, PEOU has both direct and indirect effects on behavioral intention through its impact on PU (Malhotra & Galletta, 1999). Overall, the TAM has been widely applied across diverse research fields and serves as a valuable theoretical framework in technology adoption.

The Theory of Planned Behavior (TPB), developed by Icek Ajzen in the 1980s, is a psychological theory that describes the relationship between an individual’s attitudes, intentions, and behaviors. It has also been widely employed across various research domains to understand and predict human intention to engage in a specific behavior.

Like TAM, the TPB is rooted in the Theory of Reasoned Action (TRA), which posits that an individual's behavior is determined by his or her intentions. The TPB expands this notion by identifying three influencing factors (Ajzen, 1991). The first factor is attitude, which reflects how favorably or unfavorably people view a particular behavior. In the context of using generative AI like ChatGPT, this would be how favorably people view using the tool. The second factor, subjective norms, involves people's perceptions of societal expectations and pressures regarding performing the behavior. In this case, it would be people's perceptions of what others think about using ChatGPT. The third factor is perceived behavioral control, which refers to people's confidence in their ability to perform the behavior (Ajzen & Sheikh, 2013). In the context of using generative AI like ChatGPT, this would be people's confidence in their ability to use the tool.

The three factors together determine an individual’s intention to perform a behavior – adopting generative AI like ChatGPT in this scenario. The TPB provides valuable insights into decision-making and behavior, suggesting that individuals with a positive attitude, perceived social pressure, and confidence in their ability are more likely to intend to perform a specific behavior (Ajzen, 2002). The TPB proves to be an instrumental framework in the study of human behaviors and intentions.

Incorporating the Two Theoretical Frameworks

ChatGPT is a relatively new technology, and little existing research examines people’s attitudes and behavioral intentions toward it. The Technology Acceptance Model (TAM) and the Theory of Planned Behavior (TPB) can be helpful frameworks to investigate factors that influence people’s attitudes and behavioral intentions toward using ChatGPT, particularly among college students.

Both TAM and TPB are built on the TRA and the idea that behavioral intentions influence an individual’s behaviors. TAM suggests that people’s acceptance of new technology is primarily determined by two factors: perceived usefulness and perceived ease of use. TPB can complement TAM by considering additional factors influencing behavioral intentions, such as attitudes, subjective norms, and perceived behavioral control. In addition, ChatGPT is an AI technology. When studying the adoption of AI technology, a new factor is often examined: perceived intelligence (PI), where intelligence is defined in the Oxford Dictionary of English as “the ability to acquire and apply knowledge and skills.” A 5-item intellectual evaluation scale for this construct was developed by Warner and Sugarman (1986).


Incorporating both approaches enables a nuanced exploration of the complex interplay between users’ intentions and potential factors in generative AI adoption. As ChatGPT is a leading generative AI tool, the hypotheses are tested on it. Therefore, the researcher set forth the following hypotheses:

H1: Perceived usefulness (IV) will have positive effects on college students’ attitude (DV) toward ChatGPT.

H2: Perceived ease of use (IV) will have positive effects on college students’ attitude (DV) toward ChatGPT.

H3: Subjective norm (IV) will have positive effects on college students’ attitude (DV) toward ChatGPT.

H4: Perceived intelligence (IV) will have positive effects on college students’ attitude (DV) toward ChatGPT.

H5: Perceived usefulness (IV) will have positive effects on college students’ behavioral intention (DV) toward ChatGPT.

H6: Perceived ease of use (IV) will have positive effects on college students’ behavioral intention (DV) toward ChatGPT.

H7: Subjective norm (IV) will have positive effects on college students’ behavioral intention (DV) toward ChatGPT.

H8: Perceived intelligence (IV) will have positive effects on college students’ behavioral intention (DV) toward ChatGPT.

H9: College students’ attitude (IV) will have positive effects on college students’ behavioral intention (DV) toward ChatGPT.

The independent variables are perceived usefulness, perceived ease of use, subjective norm, and perceived intelligence. The dependent variables are people’s attitude and behavioral intention toward ChatGPT.

Research Design

The researcher conducted an in-person pre-post single-condition experiment for this empirical research paper. This design was chosen because the study aims to explore the relationship between the independent and dependent variables. By measuring participants before and after the intervention, the researcher could assess changes in the outcome variables. In addition, conducting the experiment in person provided a higher level of control over the study and allowed the researcher to standardize the experimental conditions. Overall, the in-person pre-post single-condition experiment allowed the researcher to measure the effectiveness of the intervention in a relatively controlled and standardized environment. The study obtained approval from the Institutional Review Board.

Participants / Sample / Subjects

The target population for this study is college students aged 18 to 30 in the United States, including individuals of all races and genders. For convenience, the experiment was conducted with college students at Stony Brook University. The researcher anticipated recruiting 100 participants based on a power analysis using the R package pwr, which showed that a minimum of 100 participants is required to achieve a power of 0.80 for detecting a small-to-medium effect size of d = 0.25 (Cohen, 1990).
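As a rough illustration of how such a sample-size figure arises, the sketch below uses the standard normal approximation for a one-sample or paired design, n ≈ ((z_alpha + z_power) / d)². It is not the pwr calculation itself: pwr uses the noncentral t distribution and returns slightly larger values. The significance level (0.05) and the choice between a one-sided and a two-sided test are assumptions, since the text does not state them, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def approx_sample_size(d, alpha=0.05, power=0.80, two_sided=True):
    """Normal-approximation sample size for a one-sample or paired test.

    d is the standardized effect size (Cohen's d). alpha and two_sided
    are assumptions; the source text does not report them.
    """
    z = NormalDist()
    tail = alpha / 2 if two_sided else alpha
    z_alpha = z.inv_cdf(1 - tail)   # critical value for the chosen alpha
    z_power = z.inv_cdf(power)      # quantile matching the target power
    return math.ceil(((z_alpha + z_power) / d) ** 2)


print(approx_sample_size(0.25, two_sided=False))  # 99
print(approx_sample_size(0.25, two_sided=True))   # 126
```

Under this approximation, a one-sided test at d = 0.25 lands close to the 100 participants reported, while a two-sided test requires more. Which of these settings, if either, matches the original calculation is not stated.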

The participants for this study were recruited using a combination of snowball sampling, in-class engagement, and social media posts. Initially, potential participants were asked to fill out a Google Form to determine their eligibility for the study. Only college students were considered, and those who expressed interest were asked to select a timeslot for the in-person experiment within the same Google Form.

Procedure(s) / Intervention

The experiment took place at Stony Brook University in a lab setting, where participants used computers to access the pre- and post-surveys and interact with ChatGPT 3.5. Participants were seated at least six feet apart to ensure privacy and prevent interference with others’ participation. The location chosen for the experiment was the lower newsroom at the library, which was equipped with multiple computers and the relevant survey administration software to facilitate the smooth running of the experiment.

The procedure for the experiment involved multiple sessions, each with a maximum of 10 participants and lasting between 10 and 15 minutes. At the start of each session, participants took a pre-survey on Qualtrics, an online survey platform, to measure their attitudes and behavioral intentions toward ChatGPT. This pre-survey took around three to five minutes to complete.

After completing the pre-survey, participants were instructed to interact with ChatGPT by inputting prompts adapted from a previous research study (Jungherr, 2023) and observing its performance. There were four sets of prompts, and each participant was randomly assigned one set comprising four questions. Each set followed the same question structure but differed in topic: gun control, nuclear war, digital misinformation, or climate change.
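The random assignment of prompt sets can be sketched as follows. This is a minimal illustration that assumes simple (unbalanced) random assignment, which the text does not specify. The participant IDs and the function name are hypothetical.

```python
import random

# The four prompt-set topics named in the procedure.
TOPICS = ["gun control", "nuclear war", "digital misinformation", "climate change"]


def assign_prompt_sets(participant_ids, seed=None):
    """Assign each participant one prompt set uniformly at random.

    A seed makes the assignment reproducible; simple random assignment
    is an assumption, so group sizes may be unequal.
    """
    rng = random.Random(seed)
    return {pid: rng.choice(TOPICS) for pid in participant_ids}


# Hypothetical session of 10 participants.
session = [f"P{i:02d}" for i in range(1, 11)]
assignment = assign_prompt_sets(session, seed=2023)
for pid, topic in assignment.items():
    print(pid, topic)
```

If equal group sizes mattered, a shuffled, repeating list of topics could replace the independent draws.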

After participants interacted with ChatGPT and observed its performance, they completed a post-survey, which took about another five minutes. It measured the DVs (attitude and behavioral intention), the IVs (e.g., perceived usefulness, perceived ease of use, perceived intelligence, subjective norm), demographics, and covariates.

The primary intervention in this study was the use of the prompts designed for ChatGPT. These prompts were intended to elicit specific responses from the participants that would enable the researcher to measure the variables.

Instruments / Measures

The variables were measured on Qualtrics. Each variable had its own questionnaire section.


Dependent Variables

Attitude was measured using a 6-item measurement scale adapted from Davis (1989). Sample items include: “I am impressed by what ChatGPT can do,” “ChatGPT saves people’s time,” and “ChatGPT can perform better than humans.” Responses to all questions were rated on a 7-point Likert scale, ranging from 1 (Strongly Disagree) to 7 (Strongly Agree), with higher scores indicating greater agreement with the statement. The pre-intervention items were averaged into a composite score (M = 5.22, SD = 1.057, α = 0.81). The post-intervention items were averaged into a composite score (M = 5.06, SD = 0.948, α = 0.81).

Behavioral intention was measured using a 6-item measurement scale adapted from Davis (1989). Sample items include: “I intend to keep using ChatGPT,” “I intend to use ChatGPT in the near future,” and “I am interested in using ChatGPT in my daily life.” Responses to all questions were rated on a 7-point Likert scale, ranging from 1 (Strongly Disagree) to 7 (Strongly Agree), with higher scores indicating greater agreement with the statement. The pre-intervention items were averaged into a composite score (M = 4.98, SD = 1.557, α = 0.95). The post-intervention items were averaged into a composite score (M = 5.30, SD = 1.364, α = 0.96).


Independent Variables

Perceived usefulness was measured using a 6-item measurement scale adapted from Davis (1989). Sample items include: “I would find ChatGPT useful in my academics and job,” “using ChatGPT would make it easier to do my job,” and “using ChatGPT would enhance my effectiveness on the tasks.” Responses to all questions were rated on a 7-point Likert scale, ranging from 1 (Strongly Disagree) to 7 (Strongly Agree), with higher scores indicating greater agreement with the statement. The items were averaged into a composite score (M = 6.15, SD = 0.780, α = 0.95).

Perceived ease of use was measured using a 6-item measurement scale adapted from Davis (1989). Sample items include: “I would find ChatGPT easy to use,” “Learning to use ChatGPT is easy for me,” and “I would find ChatGPT to be flexible to interact with.” Responses to all questions were rated on a 7-point Likert scale, ranging from 1 (Strongly Disagree) to 7 (Strongly Agree), with higher scores indicating greater agreement with the statement. The items were averaged into a composite score (M = 5.07, SD = 1.410, α = 0.92).

Subjective norm was measured with five questions according to Ajzen (1991). Sample items include: “It is expected of me to use ChatGPT,” “I feel under social pressure to use ChatGPT,” and “Most people who are important to me think I should use ChatGPT.” Responses to all questions were rated on a 7-point Likert scale, ranging from 1 (Strongly Disagree) to 7 (Strongly Agree), with higher scores indicating greater agreement with the question. The items were averaged into a composite score (M = 4.01, SD = 1.460, α = 0.88).

Perceived intelligence was measured using a 5-item intellectual evaluation scale developed by Warner and Sugarman (1986). Responses to all questions were measured on a semantic differential scale with the following pairs: incompetent/competent, unknowledgeable/knowledgeable, irresponsible/responsible, unintelligent/intelligent, and insensible/sensible. The items were averaged into a composite score (M = 5.78, SD = 0.782, α = 0.79).

Attention was also added as an independent variable because the attention participants paid to ChatGPT’s responses during the intervention might also influence their perceptions of ChatGPT. Attention was measured by asking participants how much attention they paid to ChatGPT’s responses. The responses were rated on a 5-point Likert scale ranging from 1 (None) to 5 (A great deal) and yielded a mean score (M = 3.48, SD = 1.050).


Demographic Variables

Demographic variables included age, gender, race/ethnicity, and socioeconomic status (income). Political ideology was also measured with the question, “How would you describe your political orientation?” The responses were rated on a 5-point Likert scale ranging from 1 (Liberal) to 5 (Conservative) and yielded a mean score (M = 2.24, SD = 0.970).

Covariates

Two covariates were also measured because news consumption and frequency of ChatGPT use might influence participants’ perception of ChatGPT before the intervention.

Consumption of news about ChatGPT was measured with the question: “How much have you read about news about ChatGPT in the past 30 days?” Response options included “never,” “1-3 times,” “4-6 times,” “7-9 times,” and “equal and more than 10 times.” The responses yielded a mean score (M = 3.16, SD = 1.340).

Frequency of ChatGPT use was measured with the question: “How often did you use ChatGPT in the past 30 days?” Response options included “never,” “occasionally,” “weekly,” and “daily.” The responses yielded a mean score (M = 4.92, SD = 2.430).


Jamovi for Data Analysis

Jamovi was used for data analysis. Reliability (Cronbach’s alpha) for all variables was initially assessed, and items that could enhance alpha were retained. Mean scores for each variable were calculated, followed by a paired-sample t-test to compare pre- and post-intervention attitude and behavioral intention. The study also employed multiple regression analysis to explore two relationships: 1) the connection between the DV (post-intervention behavioral intention) and the IVs (perceived usefulness, perceived ease of use, subjective norm, perceived intelligence, pre-intervention behavioral intention, post-intervention attitude, and demographics), and 2) the association between the DV (post-intervention attitude) and the IVs (perceived usefulness, perceived ease of use, pre-intervention attitude, perceived intelligence, and demographics). Furthermore, a correlation table for key variables was generated.
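The reliability and composite-score steps can be sketched in a few lines. The analysis itself was run in Jamovi, so the code below is only an illustration of the standard Cronbach’s alpha formula, α = k/(k − 1) · (1 − Σ item variances / variance of the summed scale). The function names and the response matrix are hypothetical and are not the study’s data.

```python
from statistics import mean, variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(rows[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def composite_scores(rows):
    """Average each respondent's items into one composite score."""
    return [mean(row) for row in rows]


# Hypothetical 7-point Likert responses: 5 respondents x 3 items.
responses = [
    [5, 6, 5],
    [3, 3, 4],
    [7, 6, 7],
    [4, 5, 4],
    [6, 6, 5],
]

alpha = cronbach_alpha(responses)
scores = composite_scores(responses)
print(f"alpha = {alpha:.2f}")
print(f"composite M = {mean(scores):.2f}")
```

Alpha approaches 1 when the items move together across respondents, which is why items that lower it are candidates for removal.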

Given the post-pandemic circumstances, collecting data within a short timeframe posed challenges. In the end, there were 25 participants with no missing values.

The results indicated that the mean attitude score before the intervention (M = 5.22, SD = 1.057, α = 0.81) was 0.156 higher than the mean attitude score after the intervention (M = 5.06, SD = 0.948, α = 0.81). A paired-sample t-test indicated that this change is not statistically significant (t = 1.05, p = 0.303). The effect size (Cohen’s d) is 0.210, suggesting a small effect.

The mean score for behavioral intention before the intervention (M = 4.98, SD = 1.557, α = 0.95) is 0.312 lower than the mean score for behavioral intention after the intervention (M = 5.30, SD = 1.364, α = 0.96); this indicates a statistically significant difference (t = -2.70, p = 0.012). The effect size, represented by Cohen’s d, is -0.540, suggesting a moderate effect.
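For a paired design, the t statistic and Cohen’s d are linked by d = t / √n when d is computed from the difference scores. The sketch below applies that relation to the reported t values with n = 25, and also shows how both quantities would be computed from raw pre/post scores. It assumes the effect sizes were computed on pre-minus-post differences, which matches the signs reported; the function names and the small raw-score example are hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t_and_d(pre, post):
    """Paired-sample t statistic and Cohen's d from pre-minus-post differences."""
    diffs = [a - b for a, b in zip(pre, post)]
    d = mean(diffs) / stdev(diffs)      # standardized mean difference
    t = d * math.sqrt(len(diffs))       # t = mean / (sd / sqrt(n))
    return t, d


def d_from_t(t, n):
    """Recover Cohen's d for a paired design from t and the sample size."""
    return t / math.sqrt(n)


# Reported t values with n = 25 participants.
print(round(d_from_t(1.05, 25), 3))    # attitude: 0.21
print(round(d_from_t(-2.70, 25), 3))   # behavioral intention: -0.54

# Hypothetical raw scores, for illustration only.
t, d = paired_t_and_d(pre=[5, 4, 6, 5], post=[4, 4, 5, 3])
print(round(t, 3), round(d, 3))
```

Both recovered values agree with the effect sizes stated in the text.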

H1 hypothesized that perceived usefulness would positively impact college students’ attitude toward ChatGPT, and the results showed that the relationship is not statistically significant (b(SE) = 0.06 (0.15), p = 0.681).

H2 hypothesized that perceived ease of use would have positive effects on college students’ attitude toward ChatGPT, and the results showed that the relationship is not statistically significant (b(SE) = 0.08 (0.26), p = 0.783).

H3 hypothesized that subjective norm would have positive effects on college students’ attitude toward ChatGPT, and the results showed that the relationship is not statistically significant (b(SE) = 0.07 (0.20), p = 0.780).

H4 hypothesized that perceived intelligence would have positive effects on college students’ attitude toward ChatGPT, and the results showed that the relationship is not statistically significant (b(SE) = 0.05 (0.23), p = 0.476).

H5 hypothesized that perceived usefulness would have positive effects on college students’ behavioral intention toward ChatGPT, and the result supported this hypothesis, revealing a statistically significant positive relationship between perceived usefulness and behavioral intention (b(SE) = 0.24 (0.11), p = 0.040).

H6 hypothesized that perceived ease of use would have positive effects on college students’ behavioral intention toward ChatGPT, and the results showed that the relationship is not statistically significant (b(SE) = -0.04 (0.10), p = 0.174).

H7 hypothesized that subjective norm would have positive effects on college students’ behavioral intention toward ChatGPT, and the results showed that the relationship is not statistically significant (b(SE) = -0.05 (0.11), p = 0.274).

H8 hypothesized that perceived intelligence would have positive effects on college students’ behavioral intention toward ChatGPT, and the results showed that the relationship is not statistically significant (b(SE) = -0.06 (0.14), p = 0.476).

H9 hypothesized that college students’ attitude would have positive effects on college students’ behavioral intention toward ChatGPT, and the results showed that the relationship is not statistically significant (b(SE) = 0.11 (0.13), p = 0.410).

In addition, the researcher did not find that participants’ attention to ChatGPT’s responses, demographic variables, or consumption of news about ChatGPT had positive effects on college students’ attitude or behavioral intention toward ChatGPT; none of these relationships was statistically significant.

This study investigated college students’ attitude and behavioral intention toward the adoption of generative AI, using ChatGPT as an example through a pre-post and in-person experiment. Overall, the results revealed a significantly positive relationship between college students’ perceived usefulness and behavioral intention toward ChatGPT. Even though no statistically significant relationships were found among other variables, the researcher still observed a trend where a greater extent of agreement with the statements of the IVs corresponded to higher scores for the DVs.

While the findings contribute to our understanding of the Technology Acceptance Model by demonstrating a statistically significant relationship between perceived usefulness and behavioral intention toward ChatGPT, they also open avenues for future research to explore additional factors influencing college students’ attitudes and behavioral intentions regarding the adoption of generative AI. Future researchers are encouraged to examine other generative AI tools, such as Bing AI or Google Bard, to broaden our understanding of user perceptions and adoption in the evolving landscape of AI.

However, it is essential to note the limitations of the study, namely the small sample size and potential flaws in the experimental design. A larger sample is needed to yield meaningful results: the power analysis using the R package pwr called for at least 100 participants, so data collection is ongoing. Future research can enhance the study by recruiting larger samples, improving questionnaire depth, and employing more neutral prompts for the manipulation. Testing the two theories on general adult or larger public samples is also recommended.

To sum up, this study explores college students’ attitudes and behavioral intentions toward ChatGPT using a pre-post single-condition experiment grounded in the Technology Acceptance Model and the Theory of Planned Behavior. The results demonstrated a moderate increase in participants’ mean behavioral intention after interacting with ChatGPT. Additionally, while this study has its limitations, the findings reveal a statistically significant relationship between perceived usefulness and behavioral intention toward ChatGPT.

The findings contribute insights to the Technology Acceptance Model in the realm of generative AI adoption and offer a glimpse into college students’ perceptions of generative AI. These results encourage future researchers to explore additional factors that may influence the public’s perceptions of the potential benefits or limitations of AI language models like ChatGPT. As data collection is ongoing, the research design also serves as a model for future scholars conducting similar investigations in the ever-changing realm of generative AI on a broader scale.

Ajzen, I. (1991). The theory of planned behavior. Organizational behavior and human decision processes, 50(2), 179-211.

Ajzen, I. (2002). Perceived Behavioral Control, Self-Efficacy, Locus of Control, and the Theory of Planned Behavior. Journal of Applied Social Psychology, 32(4), 665–683. https://doi.org/10.1111/j.1559-1816.2002.tb00236.x

Ajzen, I., & Sheikh, S. (2013). Action versus inaction: Anticipated affect in the theory of planned behavior. Journal of applied social psychology, 43(1), 155-162.

Davis, F. D., Bagozzi, R. P., & Warshaw, P. R. (1989). User acceptance of computer technology: A comparison of two theoretical models. Management science, 35(8), 982-1003.

Davis, F. D. (1989). Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Quarterly, 13(3), 319–340. https://doi.org/10.2307/249008

Fishbein, M., & Ajzen, I. (1975). Belief, attitude, intention, and behavior: An introduction to theory and research. Addison-Wesley.

Kitamura, F. C. (2023). ChatGPT is shaping the future of medical writing but still requires human judgment. Radiology, 230171.

Liu, Y., Han, T., Ma, S., Zhang, J., Yang, Y., Tian, J., ... & Ge, B. (2023). Summary of ChatGPT-Related Research and Perspective Towards the Future of Large Language Models. Meta-Radiology, 100017.

Malhotra, Y., & Galletta, D. F. (1999, January). Extending the technology acceptance model to account for social influence: Theoretical bases and empirical validation. In Proceedings of the 32nd Annual Hawaii International Conference on Systems Sciences. 1999. HICSS-32. Abstracts and CD-ROM of Full Papers (pp. 14-pp). IEEE.

Susnjak, T. (2022). ChatGPT: The End of Online Exam Integrity? arXiv preprint arXiv:2212.09292.

Shen, Y., Heacock, L., Elias, J., Hentel, K. D., Reig, B., Shih, G., & Moy, L. (2023). ChatGPT and Other Large Language Models Are Double-edged Swords. Radiology, 230163–230163. https://doi.org/10.1148/radiol.230163

Warner, R. M., & Sugarman, D. B. (1986). Attributions of personality based on physical appearance, speech, and handwriting. Journal of personality and social psychology, 50(4), 792.

Jungherr, A. (2023). Using ChatGPT and Other Large Language Model (LLM) Applications for Academic Paper Assignments.

Ray, P. P. (2023). ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet of Things and Cyber-Physical Systems.