Including people with disabilities in the process of tech development starts by addressing technoableism

September 6, 2022

Surrounded by researchers in a lab, Abraham Glasser takes the team through an automated sign language recognition program. He takes pride in working on a project where the vastness of deaf culture is reflected in the technology for people with hearing disabilities.

Frustrated with attention-grabbing developments, like the Amazon Alexa that claimed to understand sign language, Glasser said these “celebratory moments are actually almost offensive” when new tech systems do not work for those who are hard of hearing, like himself.

Many projects that aim to develop American Sign Language recognition fail to do so because deaf or hard of hearing people are not included in the process, Glasser signed. Since disability populations are diverse, specific signing styles vary across different backgrounds, making technology inaccurate and unreadable without proper federal regulations in place to follow.

In 2010, the U.S. Department of Justice revised the Americans with Disabilities Act (ADA) Standards for Accessible Design, which mandate that all electronic and information technology be accessible to people with disabilities. Commercial and public entities, which include the internet, need to meet ADA standards under the law. However, the Department of Justice has yet to develop specific accessibility guidelines, and compliance has since been self-regulated. Instead, organizations are merely encouraged to follow the internationally recognized Web Content Accessibility Guidelines (WCAG).

Unlike the ADA, Section 508 of the Rehabilitation Act of 1973 requires federal agencies to make information and communication technology, including software, websites, hardware, and documents, accessible to people with disabilities.

Accessibility and designing technologies for people with disabilities, known as assistive technology, is about more than just compliance with these standards, according to disability advocates and developers. With the drive to innovate, developments in artificial intelligence (AI) have rippled into new forms that go beyond self-driving cars and the image recognition software that unlocks the latest iPhone. As advancements in technology have revolutionized accessibility in recent years, developers and disability advocates alike are approaching AI systems while situating disability at the center of design. Because disabilities and the technologies for disabled populations are diverse, the design principles that guide disability tech developers and researchers vary.

Researchers, like Glasser, who is a Ph.D. student at Rochester Institute of Technology, believe that because the range of disabilities is so diverse — over 1 billion people worldwide have a disability — not every development in assistive technology can adequately address the needs of members from the entire community. And others are completely left out.

“It’s really important to include the population in the research and development and not do it in a performative way,” Glasser signed.

In what he calls a “Wizard of Oz” experiment, where a user interacts with a computer system that is being operated by someone behind the scenes, Glasser re-envisions voice command personal assistants, like Google Voice and Alexa, for deaf and hard of hearing people who use sign language to communicate. The process, he signed, requires an unseen interpreter to be the voice for the deaf user by understanding their signing and then relaying their commands to Alexa.

“We deaf folks try to express our opinions in the academic research world,” Glasser signed. “We have to work harder and have the credentials behind our name … to try to dismantle disinformation.”

Tech companies, like Microsoft, approach disability by claiming to embrace what is called “inclusive design,” which understands that their users are diverse and that the performance of a given product should reflect the needs and experiences of everyone. Designing for people with disabilities can take two forms. The first is a system that assists in functional capabilities, like mobility and hearing aids. The second is accessibility technology: tools, such as screen readers and automated captioning, that allow people to use and interact with an existing technology.

Like Glasser’s research project, many up-and-coming developments in tech spaces are centered around AI. Microsoft, for instance, created an app called Seeing AI that allows people who are blind or have low vision to use the cameras on their phones to recognize objects, which could help with tasks like identifying currency.

Machine-learning AI can lead to an array of ethical issues because the data used to train its models treats able-bodied populations as the norm, said Ed Cutrell, senior research manager at Microsoft’s Ability Team. In the same way that developers of sign language recognition software neglect to account for the different types of signing from underrepresented backgrounds, unintended consequences arise when self-driving cars are not developed to recognize wheelchair users.

The Ability Team works directly with the disability community to build a database specifically focusing on people who have visual impairments. The data being collected is about the objects that they care about in their environment. That way, Cutrell said, his team can accurately assess object recognition systems (like Seeing AI), software that is taught how to detect objects within a room or outdoor space.

“Within the technology world, accessibility or assistive technology is kind of an afterthought, or it’s something that’s thought of as a regulatory overhead,” he said. “The government says we’ve got to do this, so we’re going to do it, as opposed to why it’s useful and important in itself.”

Rather than relying solely on Section 508 guidelines, technology company IBM launched the Equal Access Toolkit, a guide for designers and testers across the industry on how to create accessible products and services under Section 508, WCAG 2.1, and EN 301 549, a set of standards issued in Europe.

In terms of AI, Shari Trewin, IBM’s accessibility manager, said that there is an explosion of possibilities for how it can be used to build assistive technologies. But she said that the ethical issues surrounding them need to be addressed.

Unlike the requirements that regulate web accessibility software, there are no federal standards for how to design technologies using AI. A way to improve future design processes is to implement accessible component libraries (cloud-based folders containing blueprints for websites and software) that are open to the public, giving developers the building tools they need to assess their designs, she said.

One of the trade-offs to integrating AI in technology designed for people with disabilities is balancing privacy with its capability to perform accurately and effectively. This could be the case for image recognition systems that try to describe people in an environment to a user who cannot see them, Trewin said.

Many disability rights advocates in the field of technology have demonstrated that a crucial drawback to a system that is presented as universal is that it cannot accurately recognize people and objects within historically underrepresented populations. The National Institute of Standards and Technology released a report in 2019 that found racial biases within these algorithms: the image recognition systems worked best at identifying middle-aged white men’s faces, and not so well for people of color. If someone with a vision disability used this type of technology, they would be fed inaccurate information about their surroundings, rendering the product unusable.

Companies have created platforms, like IBM’s AI Fairness 360 Toolkit, that provide a range of fairness measures and techniques that can be used to identify and mitigate bias.

“There’s another concern, which is if the data that you’re feeding into a system is data from a situation where there was already bias and unfair treatment,” Trewin said, “then an AI system might learn that bias and perpetuate it.”

Microsoft, IBM, AI Now Institute, World Institute on Disability, and countless other initiatives discuss the challenges that arise when incorporating AI to enhance technology. Ultimately, many researchers and disability rights advocates argue that to reach algorithmic fairness while ensuring a product works, AI systems need to gather training data from a broad range of groups and be transparent about the reasoning behind specific results. That way, the people using them can understand the system’s solutions.

On the flip side, advocates want developers to consider what those solutions mean for people with disabilities if the technology does not fulfill the stated needs or goals of disabled people.

Lydia X. Z. Brown, a disability rights advocate and attorney, works at the Center for Democracy and Technology where they approach the relationship between disability rights and AI through the lens of justice rather than fairness. Brown said that because people live in an already unfair society that is racist, sexist, and ableist, centering discussions on algorithmic fairness is the wrong way of addressing the problem. Instead, people need to frame issues surrounding new technologies with a social justice perspective, Brown said. That way, people can see how ableism already manifests in AI technologies and developers can, in turn, create systems with “just purposes.”

When learning about new research and designs in assistive technology, Brown said a common theme was that they were built around an idea that idolizes non-disabled people’s functions, movements, speech, and communication, while therapizing people with disabilities.

“I’m autistic, and we don’t need to learn ‘social skills’ in this pathologising way,” Brown said. “Suddenly, when people talk about autistic people, there’s an assumption that the ways we socialize and communicate are wrong or defective.”

When thinking about technology for autistic people, developers come up with technologies that “will help fix or therapize autistic people,” Brown said.

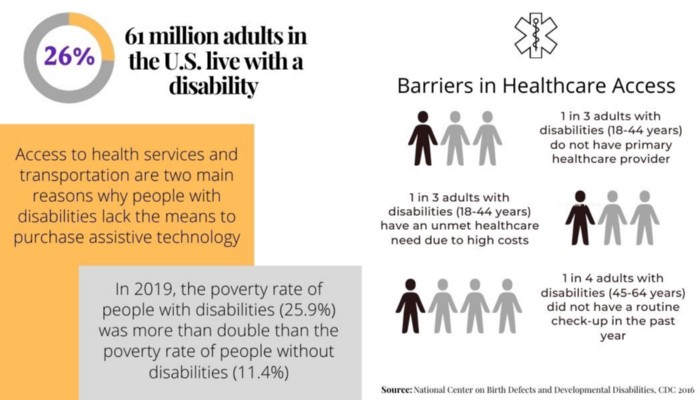

One of the root causes for creating technologies that end up doing more harm than good is that developers typically operate under the belief that they know what is best for their disabled users, Brown said. Time and resources are being invested in such technologies when, in reality, people with disabilities face other challenges that are not receiving enough attention. This includes having access to medical care or the financial means of obtaining assistive technologies.

An important idea to recognize, Brown said, is that accessibility cannot be automated. They are skeptical about pioneering technologies that can somehow use AI to automate access when existing technologies fail to meet WCAG 2.0 guidelines to begin with. Working virtually during the COVID-19 pandemic and using programs like Slack, Zoom, and Google Meet illuminated the shortcomings of accessibility features, like automated captioning, when people with disabilities found the software inaccessible, Brown said.

According to the 2020 Web Accessibility Annual Report, 98% of U.S.-based webpages are not accessible to the disability community.

“The question is whether and to what extent we should be trying to automate access, and whether and to what extent access is possible to achieve within automated applications, to begin with,” Brown said.

Addressing how ableism permeates the tech field, Hanna Herdegen, a disability studies scholar at Virginia Tech, said that technology fails people with disabilities because they are not involved in the design process. If they were, they would have a greater say over what gets developed, rather than developers spending time and money on a product that is useless to most people in the community, she said.

“It ends up being both useless, but then also there’s this sort of history in the disabled community of either — you do exactly what the doctors or the technologists are telling you to do, or you’re a bad disabled person, in the sense of like, you’re not performing disability correctly,” Herdegen said.

Os Keyes, a Ph.D. student at the University of Washington’s Department of Human Centered Design & Engineering, also agrees that AI systems are framed to always work because there is a team of researchers and scientists who are backing the deployment of such technologies.

Brown, Herdegen, and Keyes share the view that disability justice runs much deeper than having tech companies attempt to meet the needs around disability and create a more equal world by using superhuman machines.

“We don’t need AI to know what most disabled people need and want,” Keyes said. “I see a lot more AI work aimed at applying technical solutions to social problems than technical solutions to medical problems.”

By implementing disability justice-oriented training for doctors and developers instead of throwing in AI as an attractive problem-solver, Keyes said, “we can then see a substantive difference in ameliorating ableist practices in tech development.”

Many companies have put together codes of ethics to inform designers of AI systems about the responsibilities and consequences of putting such technologies into practice. Take Microsoft, Bosch, and IBM. Most, if not all, touch on “fairness,” “accountability,” and “transparency.”

Keyes proposes that these conventional ethical frameworks need to be rejected altogether because they silence the everyday lives of people with disabilities.

Sam Proulx, community lead at Fable, who is completely blind himself, said that having diversity within accessibility is important to him, so his work centers on accessibility testing in emerging tech.

“It isn’t just a list of things to check off — the sort of check once every five years, and then you’re done. Accessibility is a process and a journey that requires modification to your systems in order to get it right,” Proulx said.

Originally published in April 2021.